The relentless march of Artificial Intelligence is no longer a distant forecast; it is a seismic shift fundamentally reshaping the digital landscape. For the data centre industry, this represents more than just a new wave of demand. It is a paradigm-altering event that introduces a new set of rules for design, operations, and investment strategy. The AI customer is a different breed, with an insatiable and complex set of demands. Operators who aim to win this new generation of tenants must look beyond simply offering more space and power; they must evolve their approach to meet a more sophisticated set of requirements.

Attracting and retaining AI clients requires a strategic pivot in what a data centre is and does. It demands a move from being a passive landlord of digital real estate to becoming a strategic partner, armed with the intelligence to anticipate needs before they arise. The new mandate for data centres is clear: evolve around the principles of extreme power density, revolutionary cooling, strategic connectivity, and most importantly, data-driven foresight. The operators who thrive in this new era will be those who understand these principles and build their strategy around them, capturing the immense opportunity the AI boom presents.

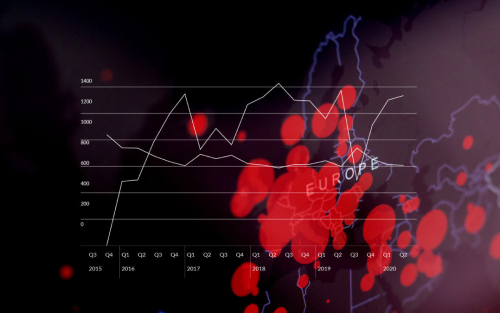

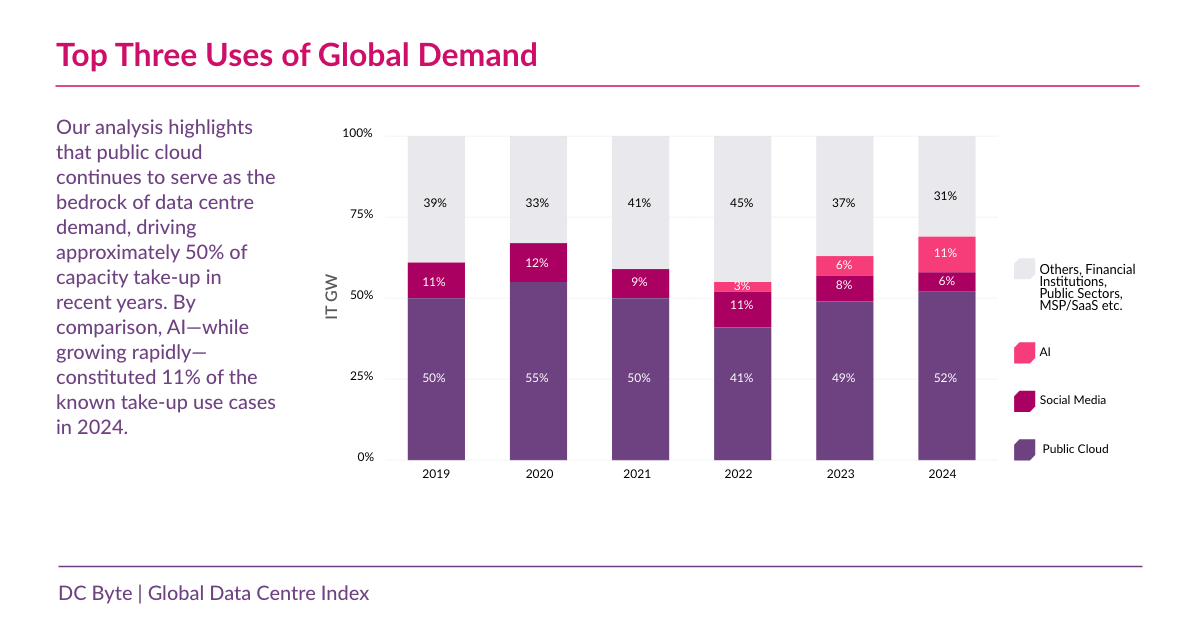

AI workloads are now one of the fastest-growing drivers of global data centre demand, nearly doubling in share over the past year across key markets around the world. Source: DC Byte Global Data Centre Report 2025.

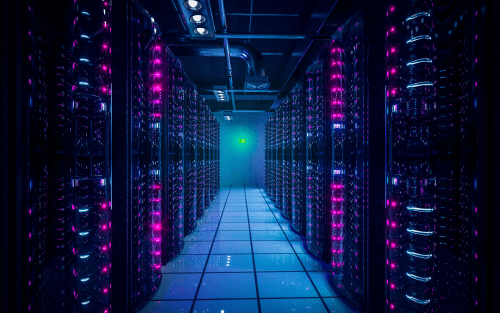

The New AI Tenant: A Power-Hungry, Density-Driven Beast

Until recently, power densities increased gradually, with the standard data centre rack consuming power in the range of 5-10 kW. This was a predictable, manageable load. The AI revolution, powered by clusters of thousands of GPUs, has shattered this standard. According to industry analysis, a single AI training rack can now demand upwards of 130 kW.

This isn’t just a quantitative leap; it’s a qualitative one that breaks traditional infrastructure. According to DC Byte analysts, even in Asia Pacific markets, which are traditionally seen as lagging in AI adoption, AI deployments are now driving typical rack densities well above 40 kW.

The hardware itself drives this extreme density. An NVIDIA H100 Tensor Core GPU, a workhorse of the current AI boom, can draw up to 700 watts on its own. A single server rack packed with these processors becomes a supercomputer in its own right, delivering an immense amount of processing power and, consequently, generating a colossal amount of heat in a very small footprint. As Schneider Electric notes in its research, traditional air cooling methods become inefficient and ultimately ineffective once rack densities surpass the 30 kW mark. This single fact has profound implications for the entire data centre ecosystem. An AI company isn’t just looking for space; it’s looking for a highly specialised environment engineered to handle these extreme loads.
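To make the density arithmetic concrete, here is a minimal back-of-the-envelope sketch. The roughly 700 W per GPU and the 30 kW air-cooling threshold come from the figures above; the number of GPUs per server, the number of servers per rack, and the `overhead_factor` covering CPUs, memory, networking and fans are illustrative assumptions, not vendor specifications.

```python
GPU_WATTS = 700            # approximate per-GPU draw quoted in the text
AIR_COOLING_LIMIT_KW = 30  # threshold attributed to Schneider Electric in the text


def rack_power_kw(gpus_per_server, servers_per_rack, overhead_factor=1.5):
    """Estimate rack power in kW.

    overhead_factor is a hypothetical multiplier for everything in the
    server that is not a GPU (CPUs, memory, NICs, fans, power losses).
    """
    gpu_watts = GPU_WATTS * gpus_per_server * servers_per_rack
    return gpu_watts * overhead_factor / 1000


def exceeds_air_cooling_limit(rack_kw):
    """True when the rack is above the 30 kW mark cited in the text."""
    return rack_kw > AIR_COOLING_LIMIT_KW


# Assumed layouts: eight GPUs per server, two or four servers per rack.
for servers in (2, 4):
    kw = rack_power_kw(gpus_per_server=8, servers_per_rack=servers)
    print(f"{servers} servers: {kw:.1f} kW, "
          f"above air-cooling limit: {exceeds_air_cooling_limit(kw)}")
```

Under these assumptions, two eight-GPU servers stay within air-cooling territory, while four already push the rack past the 30 kW mark. Denser layouts climb quickly from there.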

The Power Imperative: Beyond the Megawatt

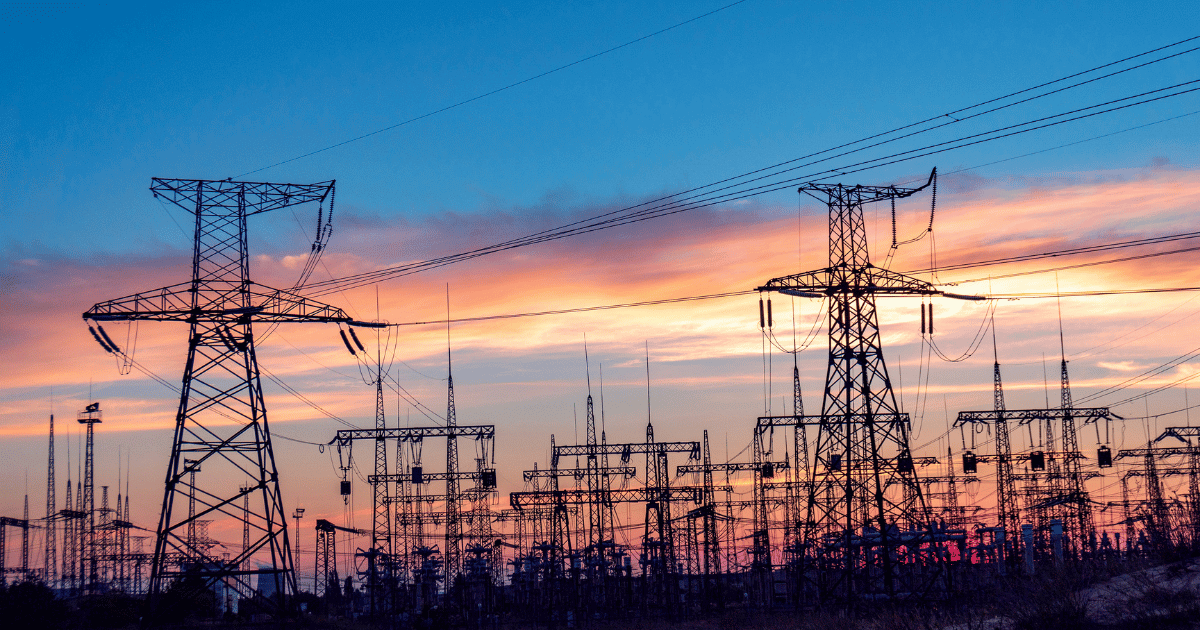

The most immediate and pressing challenge is power. The scale of AI’s energy consumption is staggering. The International Energy Agency (IEA) projects that global data centre electricity consumption could surpass 1,000 terawatt-hours by 2026, which is roughly equivalent to the entire electricity consumption of Japan. DC Byte research suggests that this number will further increase to over 1,700 terawatt-hours before the end of the decade. A significant portion of this growth is attributed directly to AI.
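As a rough illustration of what those two projections imply together, the sketch below computes the compound annual growth rate between them. It assumes that "the end of the decade" means 2030 and treats both figures as point values, although the text gives them as lower bounds from two different sources, so the result is indicative only.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


START_YEAR, START_TWH = 2026, 1_000  # IEA projection quoted in the text
END_YEAR, END_TWH = 2030, 1_700      # DC Byte figure; 2030 is an assumed end year

rate = implied_cagr(START_TWH, END_TWH, END_YEAR - START_YEAR)
print(f"Implied growth: {rate:.1%} per year")
```

On these assumptions, consumption would need to grow by roughly 14% a year over the period.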

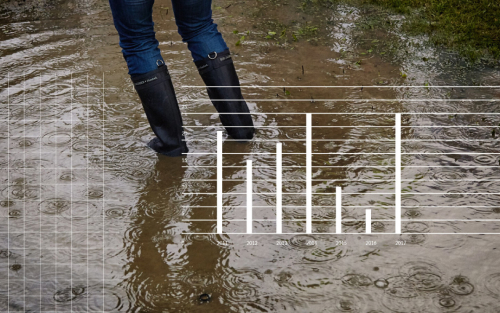

However, the problem for data centre operators is far more complex than simply securing a large power contract. The real challenge lies in the logistics of power delivery. In major data centre hubs like Northern Virginia or Dublin, the headlines are already filled with stories of power constraints and moratoriums on new grid connections. It can take years to get new high-voltage substations approved and built, creating a massive bottleneck that stalls development.

According to DC Byte’s Virginia analyst, Lilli Flynn, transmission constraints are especially acute in Loudoun County, Virginia, where Dominion Energy is working to expand capacity. In other U.S. regions like Arizona, California, and Nevada, grid-related challenges have also slowed or scaled down large-scale projects. In Arizona alone, projected timelines for new substations are being pushed back by up to two years, according to utility planning data.

These delays aren’t exclusive to AI builds, but they paint a clear picture: the availability of scalable, deliverable power is becoming the ultimate constraint on data centre growth.

“Power delivery is no longer a future concern. It’s a present-day gatekeeper,” says Alexandra Desseyn, Americas Research Manager at DC Byte. “From Loudoun County to Arizona, we’re seeing utilities struggle to keep pace with demand. Transmission bottlenecks, delayed substations, and shifting regulatory landscapes are forcing operators to rethink location strategies. Land and funding are no longer enough. Access to scalable, near-term power has become the new currency of growth.”

This is where the game is won or lost. An AI company looking to deploy a new training cluster cannot afford to wait two years for a substation to come online. They need to know now which markets, and which specific sites within those markets, have immediately available and scalable power. They need to understand the local regulatory environment, the stability of the grid, and the potential for future renewable energy integration (Finland is one example). Simply knowing a market has a certain number of megawatts in its “pipeline” is no longer sufficient. The critical question has become: how much of that power is actually deliverable today?

The Cooling Revolution: Liquid is the New Air

With extreme power density comes extreme heat. Forcing more cold air through the cold aisle is a strategy with sharply diminishing returns when dealing with 100 kW racks. The laws of thermodynamics are unforgiving, and the industry is rapidly pivoting to the only viable solution: liquid cooling.
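The thermodynamic argument can be sketched with the steady-state heat balance, heat removed = density × specific heat × volumetric flow × temperature rise. The fluid properties below are standard approximate values for air and water near room temperature; the temperature rises of 15 K for air and 10 K for water are illustrative assumptions, not design figures from any particular facility.

```python
def volumetric_flow_m3_per_s(heat_w, density_kg_m3, cp_j_per_kg_k, delta_t_k):
    """Flow needed to carry away heat_w, from heat = rho * cp * flow * delta_T."""
    return heat_w / (density_kg_m3 * cp_j_per_kg_k * delta_t_k)


# Approximate properties near room temperature.
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "cp_j_per_kg_k": 4186.0}

RACK_HEAT_W = 100_000  # the 100 kW rack discussed in the text

# Assumed temperature rise across the rack (air) and cold plate loop (water).
air_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, delta_t_k=15.0, **AIR)
water_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, delta_t_k=10.0, **WATER)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
```

With these inputs a single 100 kW rack needs about 5.5 cubic metres of air per second, against roughly 2.4 litres of water per second. That gap of three orders of magnitude in volume is why pushing more air stops being practical at these densities.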

Technologies like direct-to-chip (D2C) cooling, where liquid is piped directly to a cold plate on top of the GPU, are becoming the new standard for high-performance computing. More advanced methods, such as full immersion cooling, where entire servers are submerged in a non-conductive dielectric fluid, are moving from niche applications to mainstream consideration. DC Byte’s analyst, Olivia Ford, highlights that Digital Realty plans to support liquid cooling in its upcoming facilities in Amsterdam. And it’s not just operators making the shift. Microsoft announced in its 2025 Environmental Sustainability Report that all of its self-built data centres will transition to direct-to-chip cooling designs. Together, these moves show that the world’s largest data centre REITs and cloud hyperscalers are preparing to adopt liquid cooling technologies at scale.

This shift has massive implications for data centre design and investment. Retrofitting a traditional, air-cooled facility for liquid cooling is a complex and expensive undertaking, involving extensive new plumbing, heat exchangers, and cooling distribution units. For many operators, the only viable path is to design and build new, purpose-built “AI-ready” facilities from the ground up. This requires a deep understanding of the cooling requirements of specific AI hardware and the ability to design a flexible system that can accommodate future generations of even more powerful processors.

The Intelligence Layer: Why Guessing is No Longer an Option

In this high-stakes environment of power constraints, complex cooling designs, and multi-billion-dollar investments, the old way of making decisions is dead. Relying on outdated quarterly reports or anecdotal broker information is akin to navigating a minefield with a hand-drawn map. To succeed, data centre operators must arm themselves with real-time, verified intelligence.

“The AI boom is fundamentally changing the due diligence process for operators and their investors,” says Edward Galvin, Founder of DC Byte.

“Previously, the key questions were about colocation pricing or fibre availability. Now, the conversation is about verifying power claims, the availability of specific cooling technologies, and the true state of development of competing facilities. You cannot get this information from a press release or a static report. It requires constant, on-the-ground primary research. The operators who can provide prospective AI customers with objective, granular real-time intelligence are not just selling colocation; they are selling certainty, and in this market, certainty is the most valuable commodity there is.”

Galvin’s point underscores the critical need for a new layer of intelligence. An AI company evaluating a potential site needs to know more than just the advertised power capacity. They need to know if the local utility has approved the grid connection. They need to know if the facility’s cooling system is compatible with their specific hardware. They need to know if the construction timeline for a new data hall is on track or facing delays.
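The three checks in the paragraph above can be captured in a simple due diligence record. This is a hypothetical sketch only: the `SiteAssessment` class, its field names and the example site are invented for illustration and do not describe DC Byte's platform or data model.

```python
from dataclasses import dataclass


@dataclass
class SiteAssessment:
    """Hypothetical record of the checks an AI tenant might run on a site."""
    name: str
    advertised_mw: float
    grid_connection_approved: bool
    cooling_compatible: bool
    construction_on_track: bool

    def open_questions(self) -> list[str]:
        """Return the checks that are still unresolved for this site."""
        checks = [
            (self.grid_connection_approved, "utility approval of the grid connection"),
            (self.cooling_compatible, "cooling compatibility with tenant hardware"),
            (self.construction_on_track, "construction timeline for the data hall"),
        ]
        return [label for passed, label in checks if not passed]


# Invented example: ample advertised capacity, but one check still open.
site = SiteAssessment(
    name="Hypothetical Site A",
    advertised_mw=60.0,
    grid_connection_approved=True,
    cooling_compatible=False,
    construction_on_track=True,
)
print(site.name, "open questions:", site.open_questions())
```

The point of the structure is that advertised capacity sits alongside the checks and does not replace them: a site with a large headline figure can still return a non-empty list.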

This is the level of detail that separates a successful AI hosting strategy from a catastrophic one. It requires a platform built on a foundation of primary research, using data gathered not from scraping websites but from building relationships with local authorities, utility companies, and construction crews.

The AI-Ready Data Centre: A New Partnership

The data centre of the future, built for the AI customer of today, is more than just a building. It is a highly engineered, deeply intelligent ecosystem. It is a facility with a flexible and robust power architecture, a forward-thinking liquid cooling strategy, and the high-bandwidth connectivity needed to manage immense datasets.

But beyond the physical infrastructure, the successful data centre operator of the AI era must become a strategic partner. They must be able to provide their customers with the verified, real-time data needed to make critical deployment decisions with confidence. They must understand the nuances of regional power grids, the specific requirements of different AI workloads, and the competitive landscape as it exists today, not as it was six months ago.

The AI revolution is a challenge, but it is also the single greatest opportunity the data centre industry has ever seen. The operators who thrive will be those who recognise that they are no longer just in the business of providing space and power. They are in the business of providing intelligence.

The DC Byte Advantage: From Data to Decision

Navigating this complex new landscape requires more than just data; it requires intelligence. This is the core principle upon which DC Byte was founded. Our platform is built on a foundation of unrivalled primary research, with a global team of analysts dedicated to verifying every data point on the ground. We don’t report on market trends; we uncover them.

For businesses and operators looking to capture the AI opportunity, this means you can:

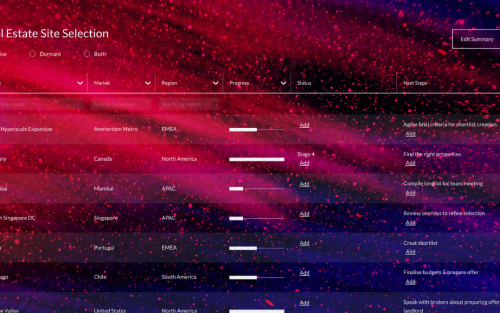

- Make Decisions with Confidence: Our real-time intelligence on power availability, construction status, and cooling technologies removes the guesswork from site selection and investment.

- Outmanoeuvre the Competition: By understanding the true state of the market, you can identify opportunities and mitigate risks before they become common knowledge.

- Leverage Expert Analysis: The power of our platform is amplified by the power of our people. Our clients work directly with our analysts, gaining access to bespoke insights and strategic advice that turn our data into their competitive advantage.

In the AI era, the most valuable asset is certainty. DC Byte provides the intelligence to achieve it.

Get in touch with our team to learn how we can support your next move.