The AI Imperative Reshaping the Cloud

Artificial intelligence is reshaping how we build and scale cloud infrastructure, and that transition is accelerating fast. Generative models, foundation models, and other AI workloads demand GPU scale, ultra-low latency, and cost structures that traditional hyperscale clouds often struggle to deliver.

According to Kristina Lesnjak, EMEA Research Manager, “AI is rapidly reshaping the foundations of cloud infrastructure, driving demand for GPU scale and ultra-low latency that neoclouds are uniquely positioned to deliver. With record levels of investment flowing into the UK’s digital infrastructure and leading technology firms aligning around AI, the momentum behind this transformation has never been stronger.”

Defining Neoclouds: What Sets Them Apart

Neoclouds are emerging as a distinct category of cloud providers built specifically for AI. Their core attributes include GPU-as-a-service models, ultra-low-latency networking, and software stacks optimised for large-scale AI workloads. Unlike traditional infrastructure-as-a-service or platform-as-a-service offerings, neoclouds provide capabilities tailored to AI, from training and inference to fine-tuning and full model hosting.

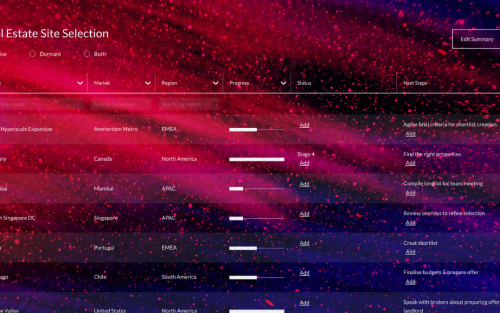

Global and European players are already establishing themselves in this space, bringing new competition to hyperscalers and regional providers. For the wider ecosystem, the implications are clear. Neoclouds introduce new types of tenants, push facilities toward higher-density requirements, and require earlier supplier engagement than conventional cloud builds.

Key Drivers: What AI Is Asking of Infrastructure

AI workloads are rewriting infrastructure requirements. Instead of small, distributed deployments, AI training calls for concentrated sites with thousands of GPUs working in parallel. This shift is creating new design and operational challenges that ripple across the ecosystem.

Scale and density: thousands of GPUs concentrated per site

Because large training runs depend on many GPUs exchanging data constantly, infrastructure is moving from distributed nodes to extremely dense, centralised sites. Facilities are now being designed for much higher rack densities than traditional enterprise or cloud deployments, stretching space planning and site selection to their limits.

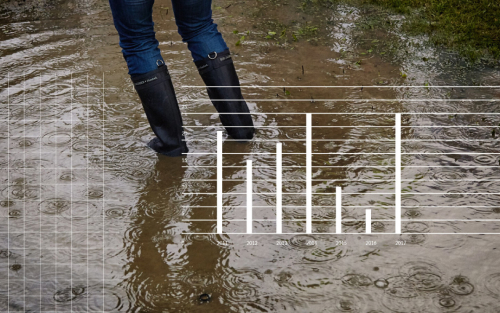

Power and cooling requirements: why they are becoming limiting factors

GPU clusters draw far more power per rack than conventional servers, and traditional air-cooling systems often cannot remove the resulting heat. Operators are increasingly exploring alternatives such as liquid or immersion cooling. At the same time, access to grid capacity is becoming one of the most decisive factors in where AI-ready facilities can be built.

Storage and I/O architectures optimised for rapid training and inference

AI workloads ingest and generate vast volumes of data, which must be stored and moved at speed to avoid bottlenecks. This is driving demand for re-architected storage systems and ultra-fast networking. In practice, facilities need more sophisticated interconnects, edge caching, and high-throughput storage arrays to keep GPUs fully utilised.

These requirements have clear implications for stakeholders across the ecosystem:

- Operators: High-density deployments require rethinking cooling systems, rack design, and facility layouts.

- Investors: Projects are becoming larger and more complex, with higher capex and extended delivery timelines.

- Real estate developers: Land parcels must be evaluated not just for size but also for power availability, cooling potential, and regulatory feasibility.

- Suppliers: Demand is rising for advanced chips, networking equipment, and immersion or liquid cooling solutions.

- Advisory firms and consultants: Clients will need guidance on integrating AI-native infrastructure into broader digital strategies.

The UK as a Hub for AI Investment and Infrastructure Growth

The UK has become a focal point for AI investment. Microsoft has pledged billions for AI infrastructure, Nvidia is promoting the idea of AI “factories,” and government initiatives such as AI Growth Zones are shaping the country’s ambitions.

Policy support is strong, but challenges remain. Energy grid capacity, planning restrictions, and sustainability expectations could slow the pace of development.

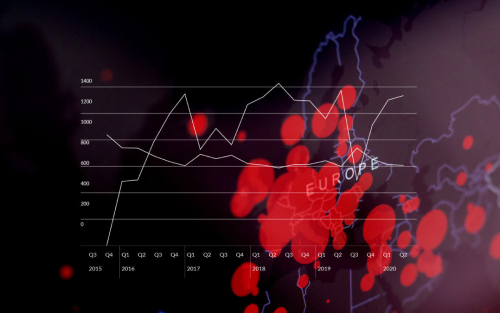

According to DC Byte’s latest data, 6.7 GW of pipeline capacity is currently tracked in the UK, compared with 46 GW across Europe, roughly 15% of the European figure. This highlights both the UK’s emerging role and the limits of its scale relative to other markets.

For operators and telcos, this means competing for early access to grid connections. For investors, it means balancing government ambition with real-world constraints. For suppliers and real estate advisors, it means anticipating where infrastructure bottlenecks will drive new opportunities.

According to Charlie Enright, Senior Analyst at DC Byte, “Government initiatives such as AI Growth Zones are creating real momentum, and with continued progress on grid availability and planning permissions, the UK is well placed to capture a meaningful share of AI investment and translate policy ambition into long-term growth.”

Business and Market Impacts

The rise of neoclouds has the potential to rebalance the cloud market. Hyperscalers still dominate, but neoclouds and regional specialists are carving out share by offering faster access to GPUs and more flexible service models.

Enterprise adoption of AI is accelerating this demand. As organisations move from experimentation to production, they need platforms that can support intensive training and large-scale inference. This is creating an ecosystem shift, where chipmakers, operators, and software stack vendors form new partnerships to serve enterprise AI needs.

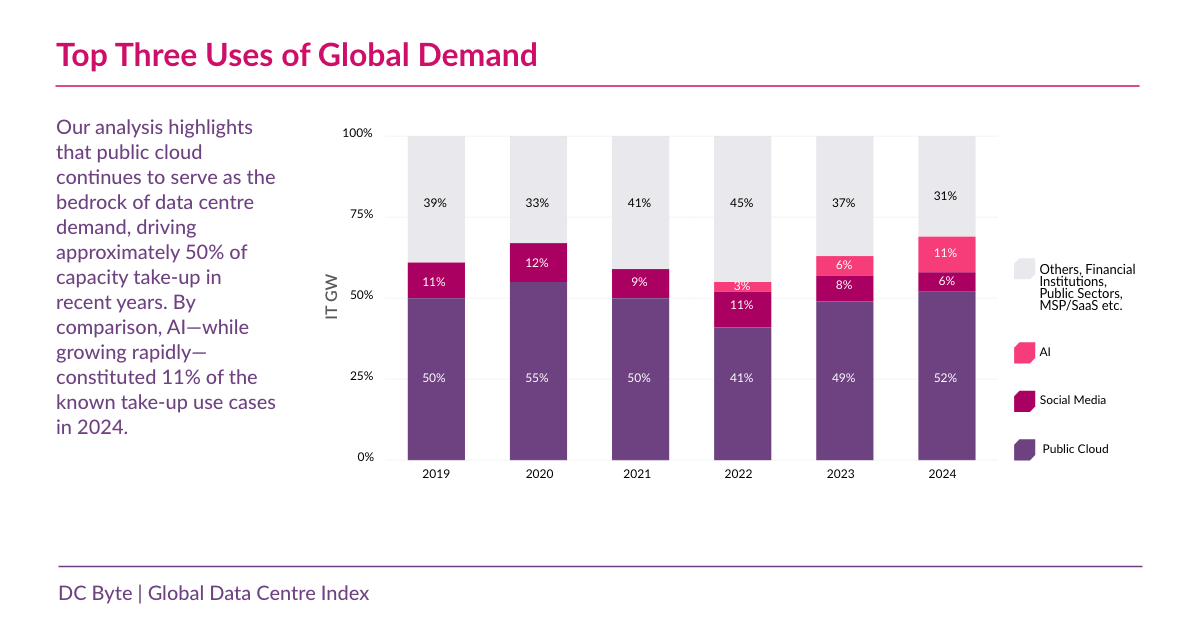

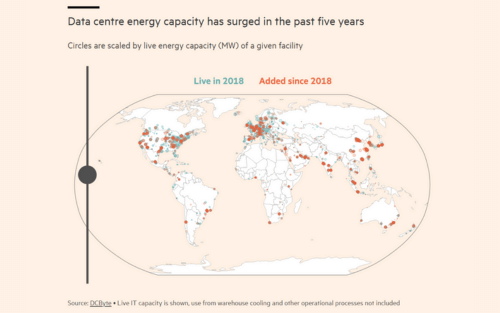

Our Global Index 2025 data highlights AI-related capacity as one of the fastest-growing segments, accounting for 11% of global take-up.

Venture and corporate investment in AI infrastructure has surged, with global spending on AI-focused data centres projected to grow at more than twice the rate of traditional cloud deployments over the next three years. CoreWeave, for example, has committed £1.5 billion to UK AI data centres as part of a broader £2.5 billion push, underlining how much capital is now flowing into the segment.

“Neocloud providers are not replacing hyperscalers, but they are reshaping competitive dynamics. By offering GPU access at speed, they are carving out early market share and attracting enterprise AI workloads. Over time, this could shift how capacity is deployed and how operators position their facilities,” says Siddharth Muzumdar, Research Director.

Looking Ahead: Scenarios and Strategic Plays

The future of cloud and AI is unlikely to be one-size-fits-all. Possible scenarios include the rise of specialised neoclouds, hybrid deployment models that blend hyperscaler and specialist platforms, and regulatory frameworks that shape who can compete in Europe.

At DC Byte, we see clear strategic opportunities emerging:

- Operators: Position facilities for GPU density and high-performance workloads.

- Investors: Track early movers and evaluate long-term sustainability of projects.

- Real estate developers: Anticipate demand for land parcels with robust grid and cooling access.

- Suppliers: Build relationships early with neocloud operators to align product development.

- Advisory firms and consultants: Guide clients through hybrid AI strategies and investment decisions.

At DC Byte, we are already tracking the pipeline projects, capacity shifts, and ecosystem partnerships that define this emerging landscape. For organisations looking to understand where AI infrastructure is headed, our data and analysis provide the clarity needed to make informed strategic decisions. Get in touch today.