February 3, 2026

Abundant, cheap RAM is a thing of the past. Across the technology stack, pricing has surged, availability has tightened, and procurement has moved from a routine task to a complex strategic hurdle. It is tempting to label this a systemic failure, but that oversimplifies the reality.

The market is shifting its memory priorities toward the workloads that are growing fastest. AI training and inference are pulling the centre of gravity away from general-purpose compute. In practical terms, memory has moved from a background component to a front-line input that shapes sequencing, timelines, and competitive positioning.

Beyond component costs, this shift changes how infrastructure teams plan performance, how procurement teams de-risk supply, and how investors separate deliverable advantage from headline ambition.

The Strategic Pivot: DDR5 to HBM

A common misconception is that the shortage stems from a lack of factory capacity. The real constraint is the shift in production focus. The memory industry is in the midst of a deliberate move away from DDR5, the backbone of consumer devices and general-purpose servers, toward High Bandwidth Memory (HBM), which has become essential infrastructure for AI data centres.

Retooling production lines for HBM requires a “structural leap” in how memory is allocated. The manufacturing effort is more specialised, the packaging more complex, and downstream demand more concentrated among a small number of large buyers.

HBM also changes the unit economics of supply. Because HBM consumes significantly more wafer capacity per bit than conventional DRAM, every stack produced for an AI data centre directly reduces the supply available to the rest of the market.

The “Crowding Out” Effect

The scale of AI demand has magnified this shift. Large-scale operators are now securing supply through massive, multi-year agreements that effectively shield them from market volatility.

The impact is clear: when a single project like OpenAI’s “Stargate” infrastructure is estimated to absorb nearly 40% of global memory supply, it creates a crowding-out effect. Consumer hardware vendors and enterprise IT teams are left competing for the remaining share of the market, facing higher premiums and extended lead times.

Memory procurement can no longer be pushed to the end of a deployment cycle. Because it is one of the key determinants of whether a build-out lands on schedule or slips, it separates the markets and players that can reliably secure inputs from those that cannot. In the near term, that separation shows up in lead times and pricing. Over a longer horizon, it shows up in which plans translate into delivered capacity.

Efficiency: The New Hardware Refresh Cycle

As memory becomes a specialised, high-cost asset, the traditional two-year hardware refresh cycle comes under pressure. Operators can no longer rely on “just-in-time” hardware upgrades to solve performance bottlenecks.

Instead, we are seeing a shift in mindset toward utilisation efficiency:

- Sweating the assets: Extending the usable life of existing infrastructure becomes a first‑order lever, not an afterthought. The focus will be on maximising the value per gigabyte of committed capacity over a longer horizon.

- Software rigour: Applications must be efficient by default. The hidden cost of memory‑inefficient design, formerly masked by cheap RAM, now shows up as constrained throughput, delayed delivery, and budget reallocated to scarce components.

- Risk pricing: As noted by analysts at DC Byte, the margin for error in capacity planning is shrinking. Infrastructure risk is now being priced based on availability and delivery certainty.

HBM strengthens the logic behind this shift because it is tied to specific workloads and performance profiles. That pushes infrastructure teams to match architecture to demand more carefully. Overprovisioning becomes a far more expensive habit when the underlying inputs are constrained and specialised.

Operationally, HBM is positioned as more efficient for AI workloads, including lower energy use that translates into less heat generation over time. In high-density environments, that efficiency can support sustained performance and reduce the operating penalty that comes with thermal constraints. In a world where power and cooling already shape delivery timelines, these gains also determine how effectively capacity can be used once it is online.

“What we are seeing is not a technology constraint in isolation, but a change in how infrastructure risk is being priced. As AI reshapes hardware priorities, the margin for error in capacity planning is shrinking.” – Jason Zhou, Research Analyst, DC Byte

What This Changes for Infrastructure Decisions

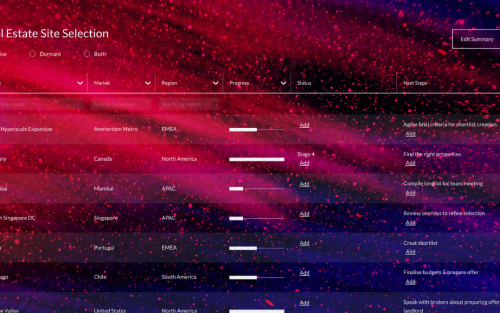

These changes in production focus, and their downstream effects, are reshaping upstream decisions for various stakeholders in areas such as architectural design, deployment planning, and delivery sequencing.

- For operators, access to hardware is no longer the primary constraint. When memory architectures are more workload-specific, reversals and redesigns become harder to absorb late in the cycle. This changes how platform choices are evaluated, how deployments are staged, and how expansion is timed. It also increases the cost of a mismatch.

- For investors, pipeline size and headline announcements are becoming weaker indicators of value, as execution certainty and delivery timing increasingly determine returns. In a constrained environment, the ability to secure inputs, deploy on schedule, and operate efficiently can matter more than the size of a forward-looking pipeline.

- For suppliers, broad-based volume strategies are losing ground to specialised, AI-aligned demand, where value is defined by precision and integration rather than scale. The market rewards suppliers that can support high-intensity deployments with tighter specifications, more complex integration, and less tolerance for delay. Suppliers positioned purely for commoditised demand face more price pressure and less predictability.

Across the ecosystem, decisions that were once correctable in the next refresh cycle are now harder to unwind, raising the cost of misjudging location, timing, and delivery capability.

Looking Ahead: The Foundations of 2026 and Beyond

The current market friction is best understood as growing pains. The industry is investing in the next generation of memory architecture that will expand performance and improve efficiency, with ongoing momentum around newer HBM iterations.

By the time additional supply comes online, the industry will have adapted its approach. Software will be leaner, infrastructure will be more purpose-built, and hardware will be deployed against real workloads rather than theoretical abundance.

The end of cheap RAM marks a transition to an era where performance is driven by architectural sophistication. For decision-makers, the question is which organisations adjust their planning quickly enough to stay ahead of the constraint.

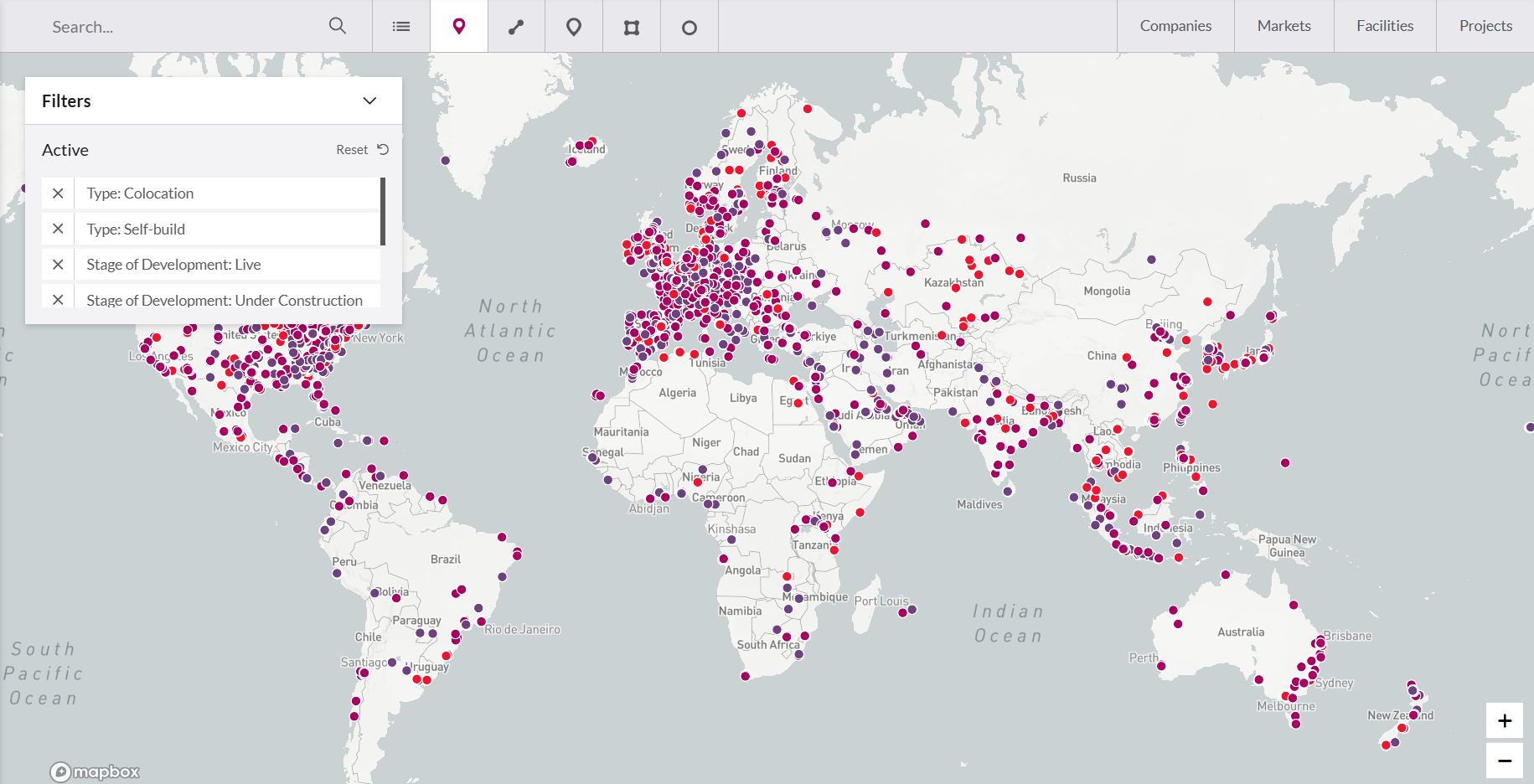

If your planning depends on separating announced capacity from deliverable capacity, you need better visibility on data centre markets, not bigger bets. Book a demo with our team to explore our Market Analytics, where we capture global data centre capacity by market and development stage.